AI agents are rapidly becoming integral to enterprise applications, powering customer support, data analysis, automation, and critical decision-making. As agents grow more capable and complex, so does the need to ensure they remain ethical, reliable, and aligned with business expectations. Agentic observability lays the foundation for systematically monitoring, evaluating, and improving agents throughout their life cycle.

With the emergence of agentic AI and increasingly complex multi-agent systems driven by LLMs, businesses need evaluation frameworks and observability tools that support responsible, scalable deployment. These tools and frameworks should also provide insight and transparency into agentic AI workflows, in which agents dynamically reason, plan, and collaborate across tools and projects.

This blog explores how to measure AI agent success, using established metrics and frameworks. That way, teams can build secure, robust multi-agent systems with confidence, transparency, and regulatory compliance.

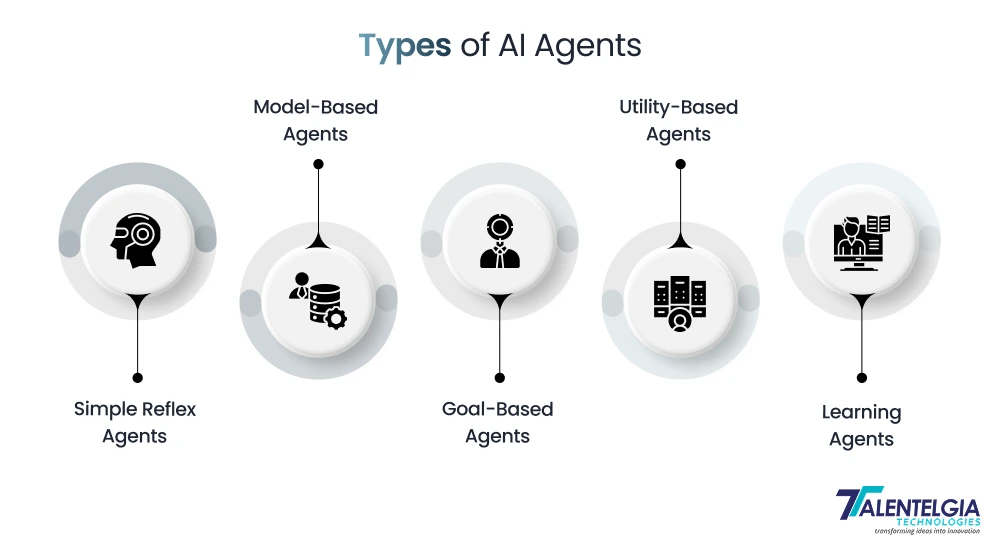

Understanding AI Agents and their Types

An AI agent is a software system or autonomous entity that uses artificial intelligence to perform tasks, make decisions, and interact with users or environments independently or with minimal human intervention.

These agents combine data from their surroundings, past interactions, and domain knowledge to reason, learn, and take actions that achieve specific goals.

Examples range from simple AI chatbots that answer customer queries to advanced autonomous robots navigating complex situations.

There are different types of AI agents based on their capabilities and roles:

- Simple Reflex Agents: Operate on fixed rules responding only to current inputs without memory of past states; useful for straightforward, repetitive tasks like password resets.

- Model-Based Agents: Build internal models to consider past and present information for more informed responses, suitable for dynamic environments.

- Goal-Based Agents: Plan actions to achieve specific objectives, often seen in natural language processing and robotics.

- Utility-Based Agents: Evaluate different outcomes to maximize user benefit, such as personalized recommendation systems.

- Learning Agents: Adapt and improve through experience, refining responses based on new data and feedback.

This spectrum highlights how AI agents vary from reactive systems to sophisticated, adaptive entities capable of complex reasoning and interaction.

Measuring AI Agent Success: KPIs, Success Rates & ROIs

AI agent metrics are invaluable for anyone developing artificial intelligence systems in 2025. As AI technologies are adopted across more sectors, measuring key performance indicators (KPIs) and return on investment (ROI) has become critical for AI engineers and product managers alike. These metrics range from basic performance measures to complex ethical considerations. With the right measurement frameworks, AI technologies can be validated, systems improved, and efforts aligned with defined business goals.

Why Measuring AI Agent Success Matters

Deploying an AI agent without a clearly defined measure of success is like navigating without a map. As AI investment grows rapidly, organizations must quantify whether these agents deliver value, not just technically but in business outcomes and customer experience. Measuring success helps stakeholders:

- Ensure the AI agent meets its intended goals effectively and efficiently

- Identify areas needing improvement or retraining

- Optimize resource allocation and reduce operational costs

- Enhance user satisfaction and engagement

- Justify ROI and inform strategic decisions

Clear metrics transform AI projects from experimental technology implementations into trusted tools driving competitive advantage.

Business Goals vs. Technical Goals of AI Agents

AI success encompasses both business outcomes and technical achievements. These two dimensions should work in harmony:

- Business Goals: These can include increasing revenue, reducing operational costs, enhancing user engagement, or improving the customer experience.

For example, according to a 2024 Codiste report, organizations that implemented AI agents saw a reduction of up to 40% in customer service costs by automating repetitive tasks.

- Technical Goals: These relate to the AI’s performance metrics such as accuracy, latency, robustness, and scalability. High technical performance is necessary but not sufficient without alignment to business impact.

Organizations that tightly align AI technical capabilities with strategic business objectives tend to realize significantly better ROI on AI initiatives.

Importance of Clear Objectives and KPIs

Clear objectives and well-chosen Key Performance Indicators (KPIs) are critical for articulating and measuring AI agent success.

According to Virtasant statistics, 70% of business leaders emphasize that clear KPIs are vital for sustained AI success, as they provide direction, accountability, and focus to AI projects.

Key aspects include:

- Specificity: Success criteria should be well-defined and specific, avoiding vague goals. For example, instead of “improve chatbot performance,” specify “achieve an F1 score of 0.85 for intent classification on a 10,000 sample test set”.

- Measurability: Use quantitative KPIs such as task completion rate, accuracy, response time, uptime, and user satisfaction scores. Qualitative measures like feedback surveys also provide important insights.

- Achievability: Set realistic targets based on prior benchmarks, research, or domain expertise. Unrealistic goals can frustrate teams and misrepresent agent capabilities.

- Relevance: KPIs must align tightly with the agent’s purpose and stakeholders’ priorities. For example, citation accuracy is crucial for medical AI but less so for casual virtual assistants.

- Time-bound: Define timelines for assessment to track progress and enable continuous improvement.

By following frameworks like SMART (Specific, Measurable, Achievable, Relevant, Time-bound), organizations can ensure that their success criteria are actionable and aligned with business needs.
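
As a minimal sketch of how a SMART criterion might be written down so it can be checked automatically, the example below encodes the intent-classification target mentioned above. The `KpiTarget` class, its field names, the baseline value, the owner, and the review date are all illustrative assumptions, not part of any specific framework.

```python
from dataclasses import dataclass
from datetime import date


@dataclass(frozen=True)
class KpiTarget:
    """A SMART-style success criterion (hypothetical structure)."""
    name: str        # Specific: exactly what is measured
    target: float    # Measurable: numeric threshold
    baseline: float  # Achievable: where the agent stands today
    owner: str       # Relevant: the stakeholder who cares about it
    review_by: date  # Time-bound: when the target is assessed

    def is_met(self, observed: float) -> bool:
        # Assumes higher is better; invert for latency or error rate.
        return observed >= self.target


intent_f1 = KpiTarget(
    name="intent classification F1 on a 10,000-sample test set",
    target=0.85,
    baseline=0.78,                 # assumed baseline, for illustration
    owner="support product team",  # assumed owner
    review_by=date(2025, 12, 31),  # assumed review date
)

print(intent_f1.is_met(0.87))  # True
```

Keeping targets in a structure like this makes it easier to review them alongside the metrics they refer to.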

Essential Performance Indicators For Measuring an AI Agent’s Success

Measuring an AI agent’s success relies heavily on choosing the right Key Performance Indicators (KPIs). These KPIs turn abstract notions of “success” into concrete, trackable metrics that reflect how well the AI agent is performing its intended tasks, delivering value, and meeting business goals. This section covers the most important KPIs, blending technical, operational, and business perspectives for a holistic evaluation.

1. Accuracy & Precision

Accuracy and precision indicate how correctly and relevantly the AI agent performs its tasks. Accuracy measures the proportion of all outputs that are correct, while precision measures how many of the outputs the agent flags as positive are genuinely correct, which keeps false alarms low.

Example: In fraud detection, a high precision value prevents legitimate transactions from being wrongfully flagged, which is critical for customer trust.

Significance: High precision limits false positives, while false negatives are captured by recall; the two are balanced through the F1 score, and commercial AI applications often target an F1 score above 0.85 for effective performance.

Key Fact: The F1 score is the harmonic mean of precision and recall, providing a useful single metric for performance evaluation in scenarios with imbalanced data.
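
To make the definitions above concrete, here is a small sketch that computes accuracy and precision from confusion-matrix counts. The counts are hypothetical fraud-detection numbers chosen only for illustration.

```python
def accuracy(tp: int, fp: int, tn: int, fn: int) -> float:
    """Share of all predictions that were correct."""
    total = tp + fp + tn + fn
    return (tp + tn) / total if total else 0.0


def precision(tp: int, fp: int) -> float:
    """Share of flagged (positive) predictions that were truly positive."""
    flagged = tp + fp
    return tp / flagged if flagged else 0.0


# Hypothetical counts: 1,000 transactions, 100 flagged as fraud.
tp, fp, tn, fn = 80, 20, 880, 20

print(accuracy(tp, fp, tn, fn))  # 0.96
print(precision(tp, fp))         # 0.8
```

Note how accuracy looks high here because most transactions are legitimate, while precision shows that one in five flags is a false alarm.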

2. Recall & F1 Score

Recall measures an AI agent’s success in identifying all relevant instances, whereas the F1 score combines recall and precision to give an overall effectiveness measure, especially valuable when precision and recall need to be balanced.

Example: Medical diagnosis AIs prioritize high recall to minimize missed cases, while the F1 score helps gauge overall diagnostic accuracy.

Significance: High recall avoids critical errors of omission, and the F1 score serves as a balanced benchmark in high-stakes domains like healthcare and finance.

Key Fact: An AI’s recall impacts its ability to detect rare but important instances, such as fraudulent transactions or diseases.
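
The relationship between recall, precision, and F1 can be sketched in a few lines. The counts below are hypothetical and the function names are illustrative.

```python
def precision(tp: int, fp: int) -> float:
    return tp / (tp + fp) if (tp + fp) else 0.0


def recall(tp: int, fn: int) -> float:
    """Share of truly positive cases that the agent found."""
    return tp / (tp + fn) if (tp + fn) else 0.0


def f1_score(p: float, r: float) -> float:
    """Harmonic mean of precision and recall."""
    return 2 * p * r / (p + r) if (p + r) else 0.0


# Hypothetical screening results: 100 real cases, 90 detected, 30 false alarms.
tp, fp, fn = 90, 30, 10
p, r = precision(tp, fp), recall(tp, fn)

print(p, r)                      # 0.75 0.9
print(round(f1_score(p, r), 3))  # 0.818
```

A system tuned for high recall, as in the medical example, will often accept lower precision; the F1 score makes that trade-off visible in one number.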

3. Response Time & Throughput

These KPIs measure how quickly and efficiently an AI agent processes data and interacts with users or systems. Response time reflects latency for individual tasks, and throughput measures processing capacity over time.

Example: Customer support AI aims for response times under 2 seconds to maintain smooth interactions, while throughput gauges handling of high customer volumes.

Significance: Low latency enhances user experience and system responsiveness; high throughput ensures scalability under demand peaks.

Key Fact: Monitoring throughput alongside response time prevents bottlenecks, maintaining both speed and volume handling efficiency.
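
As a rough sketch of how these two KPIs might be computed from raw request logs, the example below uses the nearest-rank method for latency percentiles and a simple requests-per-second figure for throughput. The latency samples and request counts are made up for illustration.

```python
import math


def percentile(values: list[float], pct: float) -> float:
    """Nearest-rank percentile (pct in the range 0-100)."""
    if not values:
        raise ValueError("no samples")
    ordered = sorted(values)
    rank = max(1, math.ceil(pct / 100 * len(ordered)))
    return ordered[rank - 1]


def throughput(request_count: int, window_seconds: float) -> float:
    """Requests handled per second over a time window."""
    return request_count / window_seconds


# Hypothetical response times in seconds for ten requests.
latencies = [0.4, 0.6, 0.5, 1.9, 0.7, 0.8, 2.4, 0.5, 0.6, 0.9]

print(percentile(latencies, 50))  # 0.6
print(percentile(latencies, 95))  # 2.4
print(throughput(600, 60))        # 10.0
```

Tracking a high percentile alongside the median matters because a 2-second target can be met on average while the slowest requests still exceed it.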

4. Task Completion Rates

Task completion rate indicates the proportion of tasks an AI agent successfully accomplishes without human help, reflecting operational autonomy and reliability.

Example: An 85% task completion rate for a virtual assistant signals strong autonomous capability in fulfilling user requests.

Significance: Higher completion rates reduce manual intervention needs, leading to cost savings and increased efficiency.

Key Fact: This metric is critical in evaluating AI systems designed for workflow automation and end-to-end task management.
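
One way to compute this metric, assuming each task is logged with a completion flag and an escalation flag, is sketched below. The `TaskRecord` structure and its field names are assumptions for illustration.

```python
from dataclasses import dataclass


@dataclass
class TaskRecord:
    task_id: str
    completed: bool           # the task reached a successful end state
    escalated_to_human: bool  # a person had to step in


def task_completion_rate(records: list[TaskRecord]) -> float:
    """Share of tasks finished successfully without human help."""
    if not records:
        return 0.0
    autonomous = sum(
        1 for r in records if r.completed and not r.escalated_to_human
    )
    return autonomous / len(records)


# Hypothetical log of four tasks.
records = [
    TaskRecord("t1", True, False),
    TaskRecord("t2", True, True),    # finished, but only with human help
    TaskRecord("t3", True, False),
    TaskRecord("t4", False, False),  # abandoned
]

print(task_completion_rate(records))  # 0.5
```

Counting escalated tasks as incomplete is a design choice that keeps the metric focused on autonomy rather than on eventual resolution.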

5. Error Rate & Failure Cases

Tracking error rates and analyzing failure cases reveal how often AI agents produce incorrect or inadequate results, which is essential for diagnosing weaknesses and improving model robustness.

Example: A 15% reduction in error rate after iterative tuning highlights performance improvements and model refinements.

Significance: Error analysis helps uncover bias, detect data drift, and improve reliability while supporting ethical AI practices.

Key Fact: Monitoring failure modes enhances trustworthiness and ensures the AI handles diverse real-world conditions safely.
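
A minimal sketch of error tracking, assuming each agent run is labeled with an outcome string, might look like the following. The outcome labels and the before/after error rates are hypothetical.

```python
from collections import Counter


def error_rate(outcomes: list[str]) -> float:
    """Share of runs whose outcome is anything other than 'ok'."""
    if not outcomes:
        return 0.0
    return sum(1 for o in outcomes if o != "ok") / len(outcomes)


def failure_breakdown(outcomes: list[str]) -> list[tuple[str, int]]:
    """Failure categories, most frequent first."""
    return Counter(o for o in outcomes if o != "ok").most_common()


def relative_reduction(before: float, after: float) -> float:
    """Fractional drop in error rate between two measurements."""
    return (before - after) / before


# Hypothetical outcomes for ten agent runs.
outcomes = ["ok", "ok", "timeout", "ok", "wrong_answer",
            "ok", "ok", "timeout", "ok", "ok"]

print(error_rate(outcomes))         # 0.3
print(failure_breakdown(outcomes))  # [('timeout', 2), ('wrong_answer', 1)]
print(round(relative_reduction(0.20, 0.17), 2))  # 0.15
```

Grouping failures by category shows where tuning effort is likely to pay off first, which a single error-rate number cannot do.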

6. User Satisfaction Score

User satisfaction scores measure how well the AI agent meets user expectations and needs, usually obtained through surveys, ratings, or direct feedback mechanisms.

Example: A chatbot with a Net Promoter Score (NPS) above 60 is typically considered to deliver an excellent user experience.

Significance: Strong user satisfaction encourages continued use and drives positive word-of-mouth, crucial for customer-facing AI solutions.

Key Fact: Qualitative feedback complements quantitative KPIs, helping refine AI designs tailored to user preferences.
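
For reference, NPS is computed from 0-10 survey ratings as the percentage of promoters (9-10) minus the percentage of detractors (0-6). The sketch below applies that standard definition to a made-up set of ratings.

```python
def net_promoter_score(ratings: list[int]) -> float:
    """NPS on a -100 to 100 scale from 0-10 survey ratings."""
    if not ratings:
        raise ValueError("no survey responses")
    promoters = sum(1 for r in ratings if r >= 9)
    detractors = sum(1 for r in ratings if r <= 6)
    return 100 * (promoters - detractors) / len(ratings)


# Hypothetical responses from ten chatbot users.
ratings = [10, 9, 9, 8, 7, 10, 6, 9, 10, 3]

print(net_promoter_score(ratings))  # 40.0
```

Here six promoters and two detractors out of ten responses give a score of 40, below the 60 threshold mentioned above.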

7. Adaptability & Learning Rate

Adaptability and learning rate assess how well an AI system improves over time by incorporating new data and feedback, ensuring relevance in dynamic environments.

Example: An AI model whose accuracy improves by 7% over several weeks through online learning demonstrates strong adaptability.

Significance: These KPIs are vital for maintaining up-to-date performance amid evolving inputs and operational contexts.

Key Fact: Rapid adaptability supports resilience against changing trends, data drift, and emerging user behaviors.
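
When reporting improvement over time, it helps to state whether a gain is in percentage points or relative to the starting value. The sketch below computes both from a series of periodic accuracy measurements; the weekly values are hypothetical.

```python
def accuracy_gain(history: list[float]) -> dict[str, float]:
    """Summarize improvement between the first and last measurement."""
    if len(history) < 2:
        raise ValueError("need at least two measurements")
    first, last = history[0], history[-1]
    if first <= 0:
        raise ValueError("first measurement must be positive")
    absolute = last - first
    return {
        "absolute_points": absolute * 100,      # percentage points
        "relative_pct": absolute / first * 100, # relative to the start
        "points_per_period": absolute * 100 / (len(history) - 1),
    }


# Hypothetical weekly accuracy of an agent that learns online.
weekly = [0.78, 0.80, 0.83, 0.85]

for key, value in accuracy_gain(weekly).items():
    print(key, round(value, 2))
```

With these numbers, a gain of 7 percentage points corresponds to roughly a 9% relative improvement, so the two framings should not be mixed.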

8. Additional Business & Operational KPIs

Beyond technical metrics, business KPIs translate AI performance into financial and operational impacts crucial for justifying investments and strategic alignment.

Example: Achieving a 25% reduction in operational costs through AI automation exemplifies tangible business value.

Significance: Metrics like Return on Investment (ROI), adoption rate, and automation level tie AI efforts to organizational goals.

Key Fact: Linking KPIs to business outcomes fosters executive buy-in and guides resource allocation.
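
A simple way to connect a cost reduction to ROI is sketched below. The baseline operating cost and the cost of the AI program are hypothetical figures, and the formula is the basic (gain minus cost) over cost definition rather than a full financial model.

```python
def cost_savings(baseline_cost: float, reduction_fraction: float) -> float:
    """Money saved when costs fall by a given fraction."""
    return baseline_cost * reduction_fraction


def roi(gain: float, cost: float) -> float:
    """Return on investment as a fraction: (gain - cost) / cost."""
    if cost <= 0:
        raise ValueError("cost must be positive")
    return (gain - cost) / cost


# Hypothetical: 1,000,000 in yearly operating costs, cut by 25%,
# with 100,000 spent on building and running the agent.
savings = cost_savings(1_000_000, 0.25)

print(savings)                # 250000.0
print(roi(savings, 100_000))  # 1.5
```

An ROI of 1.5 means each unit of spend returned 1.5 units of net benefit under these assumed figures.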

What Are the Key Tools to Measure an AI Agent’s Success?

Measuring AI agent success involves using specialized tools that provide rich insights into technical performance, user engagement, and business impact. These tools fall into several categories, each addressing specific measurement needs:

- Analytics Platforms:

Tools like Google Analytics, Tableau, and Power BI track how users engage with AI agents, visualizing KPIs such as usage patterns, task completions, and conversion rates for quick insights into behavior and impact.

- User Feedback Systems:

Platforms such as SurveyMonkey and in-app feedback widgets collect direct user opinions and satisfaction scores, revealing usability issues and improving trust through qualitative insights.

- AI Monitoring Tools:

Solutions like Fiddler AI, MLflow, and Weights & Biases help track model accuracy, data drift, and bias. Depending on the tool and how it is configured, they can also raise alerts on performance drops to help maintain reliability and compliance.

- Logging Systems:

ELK Stack and Splunk capture detailed logs of system events and errors, enabling root cause analysis and compliance with transparency requirements.

- Experimentation Platforms:

Platforms such as Optimizely facilitate A/B testing of AI features or updates to validate improvements before full-scale deployment. Google Optimize, once a common choice, was discontinued by Google in September 2023.

- Automation Tools:

Apache Airflow, Kubeflow Pipelines, and AWS Lambda automate data workflows, retraining, and metric reporting, enabling continuous, scalable performance monitoring.
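
Whichever experimentation platform is used, the underlying check in an A/B test is often a comparison of two success rates. The sketch below shows a two-proportion z-test using only the Python standard library; the sample sizes and completion counts are hypothetical, and a real platform may use a different statistical method.

```python
from math import sqrt
from statistics import NormalDist


def two_proportion_z_test(
    success_a: int, n_a: int, success_b: int, n_b: int
) -> tuple[float, float]:
    """Return (z, two-sided p-value) for the difference in success rates."""
    p_a, p_b = success_a / n_a, success_b / n_b
    pooled = (success_a + success_b) / (n_a + n_b)
    se = sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if se == 0:
        raise ValueError("no variation in outcomes; test is undefined")
    z = (p_b - p_a) / se
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    return z, p_value


# Hypothetical: current agent completes 800 of 1,000 tasks,
# the updated agent completes 850 of 1,000.
z, p_value = two_proportion_z_test(800, 1000, 850, 1000)

print(round(z, 2), round(p_value, 4))
```

A small p-value suggests the difference in task completion is unlikely to be due to chance, which supports rolling the update out more widely.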

Conclusion

AI agents are not a “one-time-investment” solution. Their real value emerges when a dedicated team diligently tracks progress, refines processes, and aligns all KPIs with desired outcomes.

Measuring AI agent success is vital to ensure these systems deliver real value efficiently and reliably. Using the right AI tools and KPIs provides clear insights into performance, user satisfaction, and business impact. Continuous monitoring and evaluation enable organizations to optimize AI agents, build trust, and adapt to changing needs, ultimately driving better outcomes in an evolving AI landscape. Connect with us to learn more about the benefits of incorporating an AI agent into your business ecosystem.
