Rapid advances in Artificial Intelligence (AI), particularly the emergence of Generative AI (“Gen AI”) and Large Language Models (LLMs), have opened significant opportunities for enterprises and other large organizations. LLMs have applications across many industries, including eCommerce, retail, and healthcare. They can be considered the cornerstone of an enterprise AI strategy because they help companies analyze unstructured data, automate tasks that require significant expertise, and improve the customer experience.

To successfully implement such technologies across your business systems, explore our AI Integration Services designed to align AI capabilities with your existing infrastructure seamlessly.

However, it’s not the model that sets one enterprise apart from another; it is the architecture built around the model. An enterprise LLM architecture will ensure that an AI initiative is an effective growth engine rather than a costly gamble. In this post, we will look into the essentials of enterprise-level LLMs, including their core components, deployment models, and development of bespoke AI technologies.

Understanding the Basics of LLM Technology: Key Components, Benefits & Working

According to a report released by Fortune Business Insights, the global AI market was valued at $233.46 billion in 2024 and is estimated to reach $1,771.62 billion by the end of 2032, a CAGR of 29.20% during the forecast period (2025 – 2032). This growth is consistent with Large Language Models (LLMs) evolving from simple experimental chatbots into an integral part of critical workflows in companies. They streamline business operations such as internal knowledge search, report generation, customer support, and compliance maintenance.

However, using LLMs in a business environment is not just a matter of calling an API. It requires the right process, analysis, tailored infrastructure, and privacy protection. Let’s go through the basics of LLM technology, explore its deployment models, and analyze its benefits and applications.

What are Large Language Models (LLMs)?

Large Language Models (LLMs) are sophisticated AI models that have been trained on massive amounts of text to understand, generate, and summarize human-like language. Based on the transformer architecture, LLMs learn how words in a sentence or paragraph relate to one another over long ranges of text. This prepares them for complex tasks such as writing long-form content, answering queries, translating between languages, and producing original content.

LLMs are the backbone of popular AI applications like ChatGPT, DeepSeek, and Google Gemini, which provide transformational capabilities across customer support, content generation, research, and more. Their ability to interpret context and predict likely continuations of text is expected to shape the future of the human-computer relationship.


Key Components of Large Language Models

Large Language Models are primarily built on the transformer architecture, which has revolutionized Natural Language Processing (NLP). The components responsible for their incredible capabilities are:

- Input Text: This is the raw text data that the model processes. It comprises words, sentences, and paragraphs that the model interprets and responds to.

- Tokenizer: The tokenizer breaks the input text into smaller units called tokens—these can be whole words, subwords, or even characters. Tokenization converts complex text into manageable pieces that the model can understand.

- Embedding: Each token is then transformed into a high-dimensional numerical vector through embeddings. These vectors capture semantic meaning and contextual relationships between words rather than just their literal appearance.
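
The first stages of this pipeline (input text, tokenizer, embedding) can be sketched in a few lines. This is a minimal illustration, not how any production model is implemented: the whitespace tokenizer, the `build_vocab` helper, and the randomly initialized embedding table are all assumptions made for the example. Real LLMs typically use learned subword tokenizers, and their embedding vectors are learned during training rather than drawn at random, which is where the semantic meaning comes from.

```python
import random


def tokenize(text: str) -> list[str]:
    """Toy word-level tokenizer: lowercase the text and split on whitespace."""
    return text.lower().split()


def build_vocab(tokens: list[str]) -> dict[str, int]:
    """Assign each distinct token an integer ID in first-seen order."""
    vocab: dict[str, int] = {}
    for tok in tokens:
        vocab.setdefault(tok, len(vocab))
    return vocab


def make_embedding_table(vocab_size: int, dim: int, seed: int = 0) -> list[list[float]]:
    """Create one vector per token ID (random here; learned in a real model)."""
    rng = random.Random(seed)
    return [[rng.uniform(-1.0, 1.0) for _ in range(dim)] for _ in range(vocab_size)]


tokens = tokenize("The model reads the text")
vocab = build_vocab(tokens)
token_ids = [vocab[t] for t in tokens]          # text -> integer IDs
table = make_embedding_table(len(vocab), dim=4)
vectors = [table[i] for i in token_ids]         # IDs -> numerical vectors
```

Both occurrences of "the" map to the same ID and therefore the same vector; it is the later layers of the model that make each token's representation depend on its context.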

How Does LLM Work?

To understand the true potential of LLMs, you have to look under the hood at how the inner systems work. Most LLMs use the “transformer” architecture, a game-changer in the world of “Deep Learning” that was introduced in 2017. Where older architectures processed language word by word, transformers can consider whole sentences and paragraphs at the same time.

- Encoder: The encoder ingests the embedded tokens and generates a contextual representation for each token by understanding its meaning in relation to others in the sequence.

- Attention Mechanism: At the heart of the transformer architecture, attention allows the model to focus on the most relevant parts of the text. It dynamically weighs different tokens based on their importance to generate meaningful context.

- Decoder: The decoder uses the information from the encoder and attention to predict or generate the next token, assembling a coherent response one token at a time.

- Output Text: Finally, the tokens generated by the decoder are converted back into human-readable text, completing the model’s response or task output.
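
The attention step described above can be illustrated with scaled dot-product attention, the form of attention used in the transformer architecture. The sketch below is a simplified, pure-Python version under stated assumptions: it uses a single attention head, and it passes the same toy vectors as queries, keys, and values, whereas a real model derives each of them from learned projections of the token embeddings.

```python
import math


def softmax(xs: list[float]) -> list[float]:
    """Turn raw scores into positive weights that sum to 1."""
    m = max(xs)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def attention(queries, keys, values):
    """Scaled dot-product attention over lists of equal-length vectors.

    Returns (outputs, weights): one output vector and one row of
    attention weights per query.
    """
    d_k = len(keys[0])
    outputs, all_weights = [], []
    for q in queries:
        # Similarity of this query to every key, scaled by sqrt(d_k).
        scores = [
            sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d_k) for k in keys
        ]
        weights = softmax(scores)
        # Weighted average of the value vectors.
        out = [
            sum(w * v[j] for w, v in zip(weights, values))
            for j in range(len(values[0]))
        ]
        outputs.append(out)
        all_weights.append(weights)
    return outputs, all_weights


# Three toy 2-dimensional token vectors (illustrative values only).
x = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
outputs, weights = attention(x, x, x)
```

Each row of `weights` shows how strongly one token attends to every token in the sequence. For the first token, the second token receives the smallest weight because their vectors have a dot product of zero.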

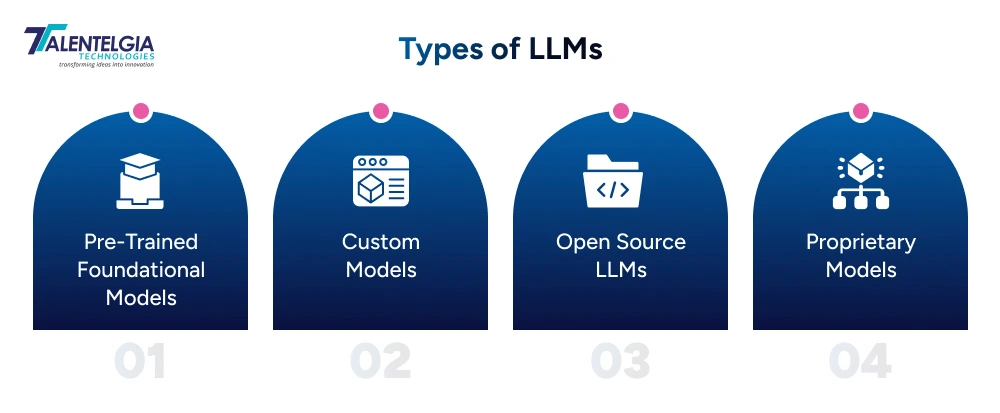

What are The Types of LLMs In the Market?

Large Language Models (LLMs) are advanced AI models trained on extensive collections of text to understand and generate human-like language. They work by learning patterns and structures in language during a rigorous pre-training phase on massive datasets. This enables LLMs to accurately predict words, phrases, or entire sentences in a wide array of contexts.

LLMs come in several types that differ based on their design, openness, and customization options. Understanding these types helps enterprises select the right model for their specific needs.

Pre-Trained Foundational Models

These LLMs are created by first collecting books, articles, websites, and other textual data, then training on billions of words drawn from those sources. No human-labeled supervision is required in this initial phase. The models learn language complexities such as grammar, dialects, patterns, and contextual meaning. The resulting model is highly versatile and can undertake a wide variety of language tasks.

Popular examples include OpenAI’s ChatGPT, Google Gemini, and Meta’s LLaMA. Companies incorporate these models into various applications because of their strong benchmark results and versatility.

Custom Models

Custom models are developed by training existing LLMs on domain-specific data, typically from a specific industry (e.g., healthcare, finance, or legal). This specialization makes the model more knowledgeable about the jargon, context, and nuanced language of that domain. Fine-tuning further increases the accuracy and efficiency of LLMs in enterprise use cases, with responses customized to meet both business objectives and compliance requirements.

Open Source LLMs

Open source LLMs are models whose architecture and weights, and in some cases training data, are released to the public. Depending on the license terms, organizations can modify, retrain, and deploy these models themselves, which offers flexibility and ownership. Open source models such as LLaMA, Falcon, or GPT-J have become popular for stimulating innovation, community collaboration, and affordable AI. The trade-off is that they rely more heavily on in-house expertise to roll them out securely and at scale.

Proprietary Models

Proprietary models, or “commercial LLMs”, are developed and maintained by for-profit organizations, which deliver them as a service (e.g., Software-as-a-Service, SaaS) under an SLA or through API agreements. They come with built-in capabilities such as pre-optimized infrastructure, security safeguards, industry compliance certifications, and enterprise support. Popular contenders are OpenAI’s GPT, Anthropic’s Claude, and Google’s PaLM. Proprietary LLMs offer reliability and ease of integration with frequent updates, but they allow limited customization and can be expensive.

| Aspect | Pre-trained Foundational Models | Custom Models | Open Source LLMs | Proprietary Models |
| --- | --- | --- | --- | --- |
| Purpose | Broad general language understanding | Domain-specific customization | Flexible, modifiable solutions | Fully managed and optimized |
| Training Data | Large, diverse, general corpus | Specialized datasets | Varies, community-driven | Controlled, proprietary datasets |
| Accessibility | Available via APIs or model downloads | Built on foundational models | Fully accessible code and weights | Restricted; commercial licensing |
| Cost | Moderate to high based on usage | Potentially high | Often free or low-cost | Substantially higher, subscription-based |
| Control & Customization | Limited customization, targeted fine-tuning | Full customization on top of the base model | Full control, open modification | Limited customization |
| Support & Maintenance | Vendor-provided support | Internal or vendor-backed | Community or self-supported | Vendor-managed 24/7 support |
| Security & Compliance | Vendor-managed, varying guarantees | Client-controlled | Depends on deployment | High standards, enterprise ready |
| Examples | GPT-4, LLaMA, PaLM | Fine-tuned GPT for law, medicine | LLaMA, Falcon, GPT-J | OpenAI GPT, Anthropic Claude |

All Enterprise LLM Deployment Models

A company’s choice of how to deploy its enterprise LLM is more than a technical decision. It reflects a combination of strategic objectives, such as regulatory compliance, budget, and the ability to scale rapidly. Businesses in different industries use different deployment approaches according to their needs, and each model presents its own advantages and disadvantages:

- On-Premises Deployment

This model installs and runs LLMs within an organization’s own data centers. It offers maximum control over hardware, data security, and compliance, making it ideal for industries with strict regulatory demands like healthcare and finance. On-premises deployment enables customization to business processes and minimizes data exposure to external parties.

Examples include companies running private AI clusters or dedicated servers for sensitive workloads.

Key Features:

- Total data and infrastructure control

- High security and compliance adherence

- Customizable model training and inference

- Potentially higher upfront costs and maintenance needs

- Cloud-Based Deployment

In cloud deployment, LLMs are hosted on third-party cloud platforms like AWS, Azure, or Google Cloud, offering scalability and quick startup without heavy initial investments. This model is well-suited for rapid experimentation, diversified workloads, and businesses with lower data sensitivity. Cloud providers often offer managed LLM services with integrated optimization, monitoring, and support.

Key Features:

- On-demand scalability and elasticity

- Reduced operational overhead

- Lower upfront capital expenditure

- Potential concerns about data privacy and compliance

- Hybrid & Virtual Private Cloud (VPC) Deployment

Hybrid models combine both on-premises and cloud resources, allowing enterprises to keep sensitive workloads on-premises while leveraging the cloud for less critical tasks. Virtual Private Clouds provide isolated, secure cloud environments dedicated to a single organization, offering a balance of control and cloud benefits. Platforms like Hugging Face and TrueFoundry support hybrid hosting of open-source and custom LLMs in enterprise-managed VPCs.

Key Features:

- Flexible workload distribution

- Enhanced security with cloud advantages

- Optimizes cost and performance trade-offs

- Suitable for complex regulatory and operational needs
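
The workload distribution described for hybrid deployments can be expressed as a simple routing rule. The sketch below is only an illustration under assumptions: the three sensitivity tiers, the `Workload` type, and the target names (`on_premises`, `vpc`, `public_cloud`) are hypothetical labels chosen for the example, and a real policy would follow an organization's own data classification and regulatory requirements.

```python
from dataclasses import dataclass
from enum import Enum


class Sensitivity(Enum):
    """Hypothetical data-classification tiers for this example."""
    PUBLIC = 1
    INTERNAL = 2
    REGULATED = 3


@dataclass(frozen=True)
class Workload:
    name: str
    sensitivity: Sensitivity


def choose_deployment(workload: Workload) -> str:
    """Map a workload to a deployment target based on data sensitivity."""
    if workload.sensitivity is Sensitivity.REGULATED:
        return "on_premises"   # keep regulated data inside own data centers
    if workload.sensitivity is Sensitivity.INTERNAL:
        return "vpc"           # isolated cloud environment for internal data
    return "public_cloud"      # elastic capacity for low-sensitivity tasks


workloads = [
    Workload("patient-record-summaries", Sensitivity.REGULATED),
    Workload("internal-knowledge-search", Sensitivity.INTERNAL),
    Workload("marketing-copy-drafts", Sensitivity.PUBLIC),
]
plan = {w.name: choose_deployment(w) for w in workloads}
```

Keeping the rule in one small function makes the policy easy to review and audit, which matters when the routing decision has compliance implications.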

Why Are Enterprises Adopting LLMs in 2025?

LLMs possess capabilities such as text generation, summarization of multimodal content, language translation, content rewriting, data classification and categorization, information analysis, and image creation. Together, these abilities give people a powerful toolset to augment creativity and improve problem-solving. Enterprises commonly use LLMs to:

Enhance Automation and Efficiency

LLMs streamline routine language-related tasks such as customer support via chatbots, automated content creation, and data analysis. This automation reduces operational costs and frees up employees to focus on strategic, high-value activities, dramatically boosting productivity.

Generate Actionable Insights

Enterprises leverage LLMs to scan and synthesize massive volumes of unstructured data from sources like social media, customer reviews, and research papers. This capability enables rapid identification of market trends, customer sentiment, and emerging opportunities, informing timely business decisions.

Personalize Customer Experience

LLMs empower businesses to communicate with customers in a more tailored manner. From generating customized marketing messages to providing multilingual support and responsive self-service, they help create engaging, context-aware user interactions that increase loyalty and satisfaction.

Support Complex Decision-Making

Advanced LLMs enable enterprises to perform sophisticated language understanding tasks, including summarizing dense reports, extracting key information, and aiding in domain-specific workflows like legal analysis or medical diagnostics. This enhances expert efficiency and reduces errors.

Accelerate Innovation

With LLMs, enterprises tap into faster research cycles by automating literature reviews, patent analysis, and ideation. They act as creative partners in content generation and technical writing, enabling rapid prototyping and iteration.

Ensure Scalability and Adaptability

LLM-based solutions scale effortlessly to handle varied workloads, adapting quickly to new languages, domains, or use cases. This flexibility supports dynamic business environments and technology evolution without massive reengineering costs.

Monitoring & Maintenance In Enterprise LLM Deployment: Key Focus Areas

Effective monitoring and maintenance are essential to ensure enterprise LLMs deliver consistent, reliable, and cost-efficient performance after deployment. Key focus areas include:

Performance Tracking

Continuous monitoring tracks critical metrics such as response accuracy, latency, throughput, and error rates. Advanced platforms provide real-time dashboards, alerting teams to anomalies or performance drops before impacting users. Semantic monitoring further gauges output relevance, factual accuracy, and safety, enabling rapid detection of issues like hallucinations or bias in the model’s responses. Integrating user feedback helps fine-tune model behavior for ongoing improvement.
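
As a rough illustration of this kind of tracking, the sketch below summarizes a batch of request logs into a 95th-percentile latency and an error rate, and raises alerts when either exceeds a threshold. The `RequestLog` record, the sample data, and the threshold values are assumptions invented for the example; a real deployment would read these from its own logging or observability platform.

```python
import math
from dataclasses import dataclass


@dataclass
class RequestLog:
    latency_ms: float
    ok: bool  # False if the request failed or returned an error


def percentile(values: list[float], pct: int) -> float:
    """Nearest-rank percentile of a non-empty list."""
    ordered = sorted(values)
    rank = max(1, math.ceil(pct * len(ordered) / 100))
    return ordered[rank - 1]


def summarize(logs: list[RequestLog], p95_budget_ms: float, max_error_rate: float) -> dict:
    """Compute p95 latency and error rate, and list any breached thresholds."""
    if not logs:
        raise ValueError("no request logs to summarize")
    p95 = percentile([r.latency_ms for r in logs], 95)
    error_rate = sum(1 for r in logs if not r.ok) / len(logs)
    alerts = []
    if p95 > p95_budget_ms:
        alerts.append("latency")
    if error_rate > max_error_rate:
        alerts.append("error_rate")
    return {"p95_ms": p95, "error_rate": error_rate, "alerts": alerts}


# Illustrative data: 20 requests, two of which failed.
logs = [RequestLog(latency_ms=100.0 * i, ok=i not in (5, 12)) for i in range(1, 21)]
report = summarize(logs, p95_budget_ms=1500.0, max_error_rate=0.05)
```

Semantic checks such as relevance or factual accuracy need evaluation data or human review and are not covered by this sketch.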

Model Updates & Versioning

Enterprises adopt robust version control processes for both model weights and prompts, developing an engineering process to manage changes safely. Automated retraining pipelines incorporate new data and address shifts in language or domain trends, preventing model degradation. Canary testing and staged rollouts ensure stability, allowing risk mitigation during updates. Clear documentation of model versions and update history maintains transparency and regulatory compliance.
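
One common way to implement a canary or staged rollout is to assign each user to a stable bucket and send only the lowest buckets to the new version. The following is a minimal sketch assuming a percentage-based rollout keyed on a user ID; the function names and the version labels `model-v1` and `model-v2` are hypothetical.

```python
import hashlib


def bucket(user_id: str, buckets: int = 100) -> int:
    """Deterministically map a user ID to a bucket in [0, buckets)."""
    digest = hashlib.sha256(user_id.encode("utf-8")).hexdigest()
    return int(digest, 16) % buckets


def pick_version(user_id: str, stable: str, canary: str, canary_percent: int) -> str:
    """Serve the canary version to roughly canary_percent% of users."""
    return canary if bucket(user_id) < canary_percent else stable


# Start with 10% of users on the new version, then widen the rollout.
users = [f"user-{i}" for i in range(1000)]
at_10 = {u: pick_version(u, "model-v1", "model-v2", 10) for u in users}
at_50 = {u: pick_version(u, "model-v1", "model-v2", 50) for u in users}
```

Because the bucket depends only on the user ID, a given user sees a consistent version across requests, and anyone in the canary group at 10% remains in it when the rollout widens to 50%. Setting the percentage back to 0 acts as a rollback.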

Cost Optimization Strategies

LLMs require considerable computing resources, so tracking and managing operational costs is paramount. Platforms break down costs by token usage, API calls, or user segments, allowing teams to identify high-consumption areas. Techniques like dynamic model routing (running smaller models for simple queries and larger ones for complex tasks), token caching, and prompt optimization reduce expenditures without sacrificing quality. Proactive budgeting and alerting prevent unexpected overruns, turning LLM infrastructure into a predictable, value-generating asset.
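
The routing and caching ideas above can be combined in a thin wrapper around whatever model client is in use. The sketch below is illustrative only: the per-token prices are made-up numbers, prompt length is used as a crude stand-in for query complexity, word counts stand in for real token counts, and a simple exact-match response cache stands in for the caching techniques mentioned. The `call_model` callable is a placeholder for a real client.

```python
from typing import Callable

# Hypothetical prices per 1,000 tokens; real prices vary by vendor and model.
PRICE_PER_1K_TOKENS = {"small": 0.0005, "large": 0.01}


def estimate_tokens(text: str) -> int:
    """Very rough token estimate based on whitespace-separated words."""
    return max(1, len(text.split()))


def route(prompt: str, threshold: int = 50) -> str:
    """Send short prompts to the small model and long ones to the large model."""
    return "small" if estimate_tokens(prompt) <= threshold else "large"


class CachedClient:
    """Wraps a model call with routing, response caching, and spend tracking."""

    def __init__(self, call_model: Callable[[str, str], str]):
        self._call_model = call_model
        self._cache: dict[str, str] = {}
        self.spend = 0.0

    def complete(self, prompt: str) -> str:
        if prompt in self._cache:          # cache hit: no model call, no cost
            return self._cache[prompt]
        model = route(prompt)
        answer = self._call_model(model, prompt)
        tokens = estimate_tokens(prompt) + estimate_tokens(answer)
        self.spend += tokens / 1000 * PRICE_PER_1K_TOKENS[model]
        self._cache[prompt] = answer
        return answer


def fake_model(model: str, prompt: str) -> str:
    """Stand-in for a real model call so the example runs offline."""
    return f"[{model}] response"


client = CachedClient(fake_model)
first = client.complete("Summarize this support ticket")
second = client.complete("Summarize this support ticket")  # served from cache
```

Tracking `spend` per client, team, or user segment is what makes it possible to alert on budgets before an overrun occurs.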

Endnote

In summary, the path toward developing a commercial-grade proprietary LLM is based on strategies focusing on specific problem-solving and leveraging agile development approaches, combined with unwavering dedication to user feedback. This is what enables you to not only overcome the challenges associated with developing generative AI but also make sure the final product can actually have an impact in the real world, help advance innovation, and give your business an edge in the digital age.
