Large language models are reshaping the way we interact with technology, powering everything from intelligent chatbots to advanced data analytics and content generation. While developing these models is a complex task, deploying, managing, and maintaining them effectively is an equally critical challenge. Without proper management, even the most advanced models can underperform or fail to deliver consistent results. This is why understanding the practices and tools that support large language model operations is essential for businesses and developers alike. In this blog, we will explore what LLMOps is, its major components, how LLMOps compares with MLOps, and more. Let's get started.

What Is LLMOps?

The term LLMOps (Large Language Model Operations) describes the practices and procedures for effectively managing, deploying, and optimizing LLMs. These models are sophisticated AI systems that learn to perform tasks like text generation, translation, and summarization by analyzing huge amounts of digital data, including text, code, and, for multimodal models, images.

Similar to DevOps and MLOps, LLMOps aims to build automated pipelines for deploying models, monitoring them, and optimizing the deployed versions so that they perform reliably in production and at scale. It also covers governance, compliance, and security, ensuring that AI models are trusted and meet organizational and regulatory requirements.

A well-organized LLMOps lifecycle helps organizations deliver AI business solutions smoothly while maintaining scalability and efficiency and reducing operational risk. Through data management, continuous monitoring, and regular updates, LLMOps helps keep large language models powerful yet practical, safe, and well-functioning for real-world use.

Benefits Of LLMOps

Adopting LLMOps offers significant benefits to organizations that use large language models. It speeds up the development, improvement, and deployment of NLP applications and AI chatbots, thereby simplifying the end-to-end AI lifecycle.

Here are the advantages LLMOps brings to efficient, secure, and scalable AI operations:

1. Efficiency

LLMOps makes AI development faster and more efficient by bringing all teams (data scientists, ML engineers, DevOps, and business stakeholders) onto a single platform. This unified workflow enhances collaboration and accelerates every stage of the model lifecycle, from data preparation and fine-tuning LLMs to deployment and monitoring.

With automated pipelines, repetitive tasks such as data labeling, testing, and model validation can be handled quickly, allowing teams to focus on innovation. LLMOps also reduces computational costs by optimizing model architectures, hyperparameters, and inference performance. Techniques like model pruning, quantization, and distributed training further enhance model optimization and resource efficiency.
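To make the resource argument for quantization concrete, here is a minimal sketch of the arithmetic behind it. It only estimates the memory needed to hold the weights; the 7-billion-parameter model size is a hypothetical example, and real deployments also need memory for activations and caches.

```python
def weight_memory_gb(num_params: int, bits_per_param: int) -> float:
    """Approximate memory needed to hold model weights, in GB (10**9 bytes)."""
    return num_params * bits_per_param / 8 / 1e9


# Hypothetical model size, chosen only for illustration.
PARAMS_7B = 7_000_000_000

for label, bits in [("fp32", 32), ("fp16", 16), ("int8", 8), ("int4", 4)]:
    print(f"{label}: {weight_memory_gb(PARAMS_7B, bits):.1f} GB")
```

Halving the bits per parameter halves the weight footprint, which is why lower-precision formats can let the same model fit on smaller or fewer accelerators.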

In addition, LLMOps helps data pipelines stay clean and consistent. It promotes strong data management practices, from sourcing and preprocessing to real-time updates, so that NLP applications, chatbots, and other AI systems can deliver more accurate and context-aware responses.

2. Risk Reduction

Security and compliance are part and parcel of every responsible AI system. Enterprise LLMOps platforms enforce data privacy, manage user access permissions, and secure access to sensitive records and functions. They also include monitoring, so that potential risks or data drift can be detected early, before they leave your systems vulnerable.

Greater transparency is also important in helping businesses comply with data protection regulations. LLMOps also supports ethical AI by building fairness, explainability, and accountability into model design, so that AI decisions remain fair and interpretable throughout the model's lifetime.

3. Scalability

Scalability is critical as companies expand their AI efforts. LLMOps reduces the complexity of scalable model serving through continuous integration, delivery, and tracking across environments. Whether you have hundreds of chatbot models under management or are deploying NLP-based applications across networks around the world, LLMOps helps keep your systems up and running.

Automated pipelines and feedback loops enable seamless updates and ongoing model fine-tuning. A well-designed LLMOps setup can serve thousands of concurrent inference requests while maintaining performance. This flexibility helps ensure that workloads stay balanced, even during busy times.

By encouraging better collaboration between data, DevOps, and IT teams, LLMOps enhances release velocity, reduces conflicts, and keeps AI systems running reliably at scale.

4. Enhanced Governance and Compliance

As regulatory requirements tighten, LLMOps provides a robust framework to ensure AI systems remain transparent, accountable, and secure. Enterprise teams can track how model outputs are generated, monitor changes, and maintain detailed logs of model versions, input data, and prompt templates. Access controls safeguard sensitive models and information, while compliance with internal policies and industry-specific standards is enforced automatically. This level of governance is especially crucial for sectors like BFSI, healthcare, and legal, where handling confidential data safely is essential, making LLMOps a reliable solution for enterprise-grade AI operations.
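As a rough illustration of what a log of model versions, inputs, and prompt templates might look like, here is a minimal sketch using only the Python standard library. The `AuditRecord` fields, the identifiers, and the choice to store hashes rather than raw text are all assumptions made for this example, not a prescribed schema.

```python
import hashlib
import json
from dataclasses import asdict, dataclass
from datetime import datetime, timezone


@dataclass(frozen=True)
class AuditRecord:
    """One hypothetical audit entry per model call."""
    model_version: str
    prompt_template_id: str
    input_sha256: str
    output_sha256: str
    timestamp: str


def sha256_text(text: str) -> str:
    """Hash text so the log can prove what was sent without storing it."""
    return hashlib.sha256(text.encode("utf-8")).hexdigest()


def make_audit_record(model_version, prompt_template_id, user_input, model_output):
    return AuditRecord(
        model_version=model_version,
        prompt_template_id=prompt_template_id,
        input_sha256=sha256_text(user_input),
        output_sha256=sha256_text(model_output),
        timestamp=datetime.now(timezone.utc).isoformat(),
    )


# Illustrative values only; the version and template names are made up.
record = make_audit_record(
    "support-bot-v3", "refund-policy-v2",
    "Can I get a refund?", "Yes, within 30 days.",
)
print(json.dumps(asdict(record), indent=2))
```

Storing hashes instead of raw text is one possible design choice for sensitive sectors; teams that need full replay would store the encrypted text instead.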

5. Aligned Cross-Functional Collaboration

Enterprise AI initiatives extend beyond data science, involving IT, product, and business teams. LLMOps enables seamless collaboration across these functions, allowing teams to coordinate on prompts, experiments, and deployment plans. Centralized feedback, shared documentation, and standardized processes help keep decisions data-driven and aligned with business objectives. By fostering cross-functional alignment, LLMOps improves operational efficiency, accelerates model deployment, and helps enterprise AI projects remain agile and synchronized, which is a key advantage over traditional MLOps for large-scale AI operations.

Components Of LLMOps

Running powerful large language models (LLMs) effectively requires a controlled setup that combines AI integration services, automation, and best practices from DevOps and MLOps. These are the core elements of LLMOps for enterprise:

Data Management:

The basis for any AI system is high-quality data. LLMOps makes sure that data stays well organized, correct, and dependable over time. This entails gathering, cleaning, and maintaining data to help prevent training problems and boost model performance.
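A minimal sketch of one such cleaning step is shown below, assuming the data is a list of short text records. The function name and the specific rules (whitespace normalization, dropping empty entries, case-insensitive de-duplication) are illustrative choices; real pipelines typically add many more checks.

```python
import re


def clean_records(texts):
    """Normalize whitespace, drop empty entries, and remove duplicates
    (case-insensitive) while preserving first-seen order."""
    seen = set()
    cleaned = []
    for text in texts:
        normalized = re.sub(r"\s+", " ", text).strip()
        key = normalized.lower()
        if not normalized or key in seen:
            continue
        seen.add(key)
        cleaned.append(normalized)
    return cleaned


raw = [
    "  How do I reset my password? ",
    "how do I reset   my password?",
    "",
    "Where is my invoice?",
]
print(clean_records(raw))
# → ['How do I reset my password?', 'Where is my invoice?']
```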

Architectural Design:

Strong system architecture is necessary to scale and to integrate easily with legacy systems. This involves designing pipelines that support continuous deployment, monitoring, and updates while the model remains able to handle growing workloads efficiently.

Deployment:

Smooth deployment is the key to moving LLMs from development to production. With automated pipelines and processes inspired by DevOps, enterprises can deploy models faster, with fewer errors and high reliability.

Data Privacy & Protection:

Security and compliance are essential. LLMOps focuses on mitigating the risk of sensitive data exposure, adhering to governance standards, and meeting legal and regulatory requirements. That helps build trust in AI systems while protecting business and user data.

Ethics & Fairness:

AI must be responsible. LLMOps integrates capabilities for bias detection and mitigation, transparency in algorithmic decisions, and fairness across model, data, and user interactions.

LLMOps Vs MLOps

Although MLOps and LLMOps share a common basis in automating machine learning processes, they differ greatly in scale, complexity, and ethical considerations. MLOps is about managing traditional machine learning models, while LLMOps applies those practices to large language models, which require massive computing resources and stronger governance controls.

| Feature | LLMOps | MLOps |
| --- | --- | --- |
| Scope | Focused on managing, deploying, and optimizing Large Language Models (LLMs). | Deals with the complete lifecycle management of traditional machine learning models. |
| Model Complexity | Involves handling massive models with billions of parameters and complex architecture. | Works with models of varying complexity, from simple regression to deep learning. |
| Resource Management | Requires orchestration of high-end GPUs, distributed systems, and large-scale storage for LLMs. | Aims for cost-effective scalability and efficient resource allocation across standard ML pipelines. |
| Performance Monitoring | Tracks model accuracy, drift, and hallucination tendencies while addressing bias and linguistic consistency. | Monitors performance metrics like precision, recall, and data drift to maintain accuracy over time. |
| Model Training | Retraining involves refining massive datasets and fine-tuning pretrained LLMs with specific domain data. | Models are retrained periodically based on new data or performance degradation signals. |
| Ethical & Compliance Focus | Prioritizes fairness, transparency, and responsible AI due to the high public impact of generated outputs. | Ethical concerns depend on use case, mainly centered on data privacy and bias mitigation. |
| Deployment Challenges | Faces hurdles related to integration, inference cost, latency, and responsible output generation. | Challenges include automation silos, model reproducibility, and environment consistency. |

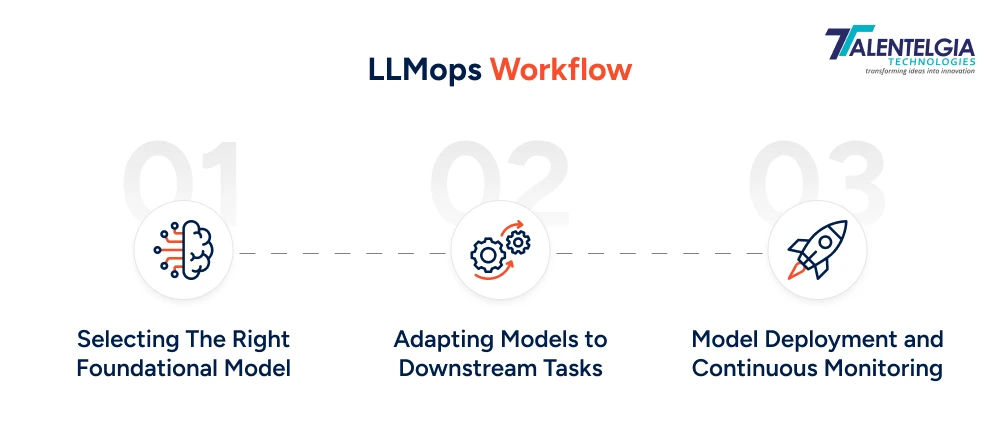

How Does LLMOps Work?

1. Selecting The Right Foundational Model

Each LLMOps process begins with selecting the right foundation model, an LLM that has already been trained on vast, varied datasets. Such models are computationally expensive to train from scratch. For example, Lambda Labs estimated that training GPT-3 (175 billion parameters) would take about 355 years on a single Tesla V100 GPU and cost roughly $4.6 million at cloud GPU prices.

To control cost while getting good performance, a company generally chooses between proprietary and open-source models:

Proprietary models (e.g., GPT-4 by OpenAI, Claude by Anthropic, Jurassic-2 by AI21 Labs) provide strong performance and accuracy, but they can be expensive to use as an API service and offer less flexibility for custom training.

Open-source models (e.g., LLaMA, Flan-T5, GPT-Neo, or Pythia, with Stable Diffusion playing a similar role for image generation) are more cost-effective and easier to adapt for companies that want control over fine-tuning and deployment.

The decision is driven by budget, compliance needs, model interpretability needs, scalability requirements, and similar factors.
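The 355 years and $4.6 million figures can be roughly reproduced with back-of-the-envelope arithmetic. The sketch below is not the original calculation: the three input constants (total training compute, sustained V100 throughput, and price per GPU-hour) are assumptions chosen to be consistent with the quoted numbers.

```python
# All three inputs are assumptions for illustration, not measured values.
TRAINING_FLOPS = 3.14e23        # assumed total compute to train GPT-3
V100_FLOPS_PER_SECOND = 28e12   # assumed sustained V100 throughput (28 TFLOPS)
PRICE_PER_GPU_HOUR_USD = 1.50   # assumed cloud price for one V100

SECONDS_PER_YEAR = 365 * 24 * 3600
HOURS_PER_YEAR = 365 * 24

# Time on a single GPU, then cost of renting that many GPU-hours.
gpu_years = TRAINING_FLOPS / V100_FLOPS_PER_SECOND / SECONDS_PER_YEAR
cost_usd = gpu_years * HOURS_PER_YEAR * PRICE_PER_GPU_HOUR_USD

print(f"Single-GPU training time: {gpu_years:.0f} years")
print(f"Estimated cost: ${cost_usd / 1e6:.1f} million")
```

The "years" figure is GPU-time, so spreading the work across many GPUs shortens the wall-clock time but leaves the total GPU-hours, and therefore the approximate cost, unchanged.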

2. Adapting Models to Downstream Tasks

Once the base model is selected, it needs to be adapted to individual tasks. This phase helps ensure that the model's outputs are trustworthy, context-aware, and accurate for your application.

LLMOps teams use several strategies to adjust an LLM's behavior:

- Prompt Engineering: Writing structured prompts that steer model responses effectively.

- Fine-Tuning: Further training a pre-trained model on data from a given domain.

- External Data Connection: Using APIs, databases, or embeddings to feed the model real contextual data.

- Hallucination Control: Constraining and checking model outputs to reduce fabricated or misleading information.
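Three of these strategies (a structured prompt, external context, and an instruction that discourages made-up answers) can be combined in a few lines. The sketch below is a toy: the template wording and the `retrieve` and `build_prompt` helpers are hypothetical, and the word-overlap ranking stands in for the embedding-based search a production system would more likely use. No model is called; the code only builds the prompt text.

```python
PROMPT_TEMPLATE = (
    "Answer the question using only the context below. "
    "If the context is not sufficient, say you do not know.\n\n"
    "Context:\n{context}\n\n"
    "Question: {question}\nAnswer:"
)


def retrieve(question, documents, top_k=2):
    """Rank documents by how many words they share with the question."""
    question_words = set(question.lower().split())
    scored = [
        (len(question_words & set(doc.lower().split())), doc)
        for doc in documents
    ]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    # Keep only documents that share at least one word with the question.
    return [doc for score, doc in scored[:top_k] if score > 0]


def build_prompt(question, documents):
    context = "\n".join(f"- {doc}" for doc in retrieve(question, documents))
    return PROMPT_TEMPLATE.format(context=context, question=question)


# Made-up knowledge base entries for illustration.
docs = [
    "Refunds are available within 30 days of purchase.",
    "Our office is closed on public holidays.",
    "Shipping takes 3 to 5 business days.",
]
prompt = build_prompt("Are refunds available after purchase?", docs)
print(prompt)
```

Only the refund document shares words with the question, so it is the only one placed in the context section of the prompt.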

Also, while MLOps validates performance with metrics such as accuracy on a validation dataset, LLMOps calls for ongoing refinement and real-world testing. Teams use tools such as HoneyHive and HumanLoop, along with model A/B testing frameworks, to compare model outputs over time and measure output quality.
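As a simple illustration of A/B comparison, the sketch below summarizes pairwise preference judgments between two model variants. It does not use the API of any of the tools named above; the `win_rates` helper and the judgment labels are assumptions for this example, and the judgments themselves are made-up data.

```python
from collections import Counter


def win_rates(judgments):
    """Share of comparisons won by variant A, variant B, or tied."""
    if not judgments:
        raise ValueError("at least one judgment is required")
    counts = Counter(judgments)
    total = len(judgments)
    return {label: counts[label] / total for label in ("A", "B", "tie")}


# Each entry is one reviewer's preference for the same prompt answered
# by two model variants (illustrative data only).
judgments = ["A", "B", "A", "tie", "A", "B", "A", "A", "tie", "B"]
print(win_rates(judgments))
# → {'A': 0.5, 'B': 0.3, 'tie': 0.2}
```

With so few comparisons the difference is not statistically meaningful; in practice teams collect many more judgments before switching variants.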

3. Model Deployment and Continuous Monitoring

LLMs in production need to be monitored, and they need to be versioned as the models mature. Every move to a new model version (e.g., from GPT-3.5 to GPT-4) may affect the API's behavior and response quality.

To keep behavior consistent, LLMOps teams use monitoring and observability software like WhyLabs, HumanLoop, and Arize AI. These solutions help track:

- Output relevance and accuracy

- Model drift and hallucination frequency

- Latency, serving cost, and system health

Through a combination of automated monitoring, feedback loops, and continuous retraining, LLMOps helps ensure that deployed LLM-powered applications maintain accuracy, remain compliant, and meet users' expectations.
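For the latency and cost items in particular, the underlying bookkeeping is simple. The sketch below is a minimal in-memory tracker and does not reflect the API of any of the tools named above; the `RequestLog` class and the per-token price are hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class RequestLog:
    """Hypothetical in-memory tracker for latency and token usage."""
    latencies_ms: list = field(default_factory=list)
    total_tokens: int = 0

    def record(self, latency_ms: float, tokens: int) -> None:
        self.latencies_ms.append(latency_ms)
        self.total_tokens += tokens

    def p95_latency_ms(self) -> float:
        if not self.latencies_ms:
            raise ValueError("no requests recorded")
        ordered = sorted(self.latencies_ms)
        # Nearest-rank percentile: ceil(0.95 * n) in integer arithmetic.
        rank = (95 * len(ordered) + 99) // 100
        return ordered[rank - 1]

    def estimated_cost_usd(self, usd_per_1k_tokens: float) -> float:
        return self.total_tokens / 1000 * usd_per_1k_tokens


log = RequestLog()
for i in range(1, 21):
    # 20 simulated requests: 100 ms, 200 ms, ..., 2000 ms, 500 tokens each.
    log.record(latency_ms=100 * i, tokens=500)

print(log.p95_latency_ms())            # 95th-percentile latency in ms
print(log.estimated_cost_usd(0.002))   # price per 1k tokens is an assumption
```

Tracking a high percentile rather than the average is a common choice because a few very slow responses can hurt user experience even when the mean looks healthy.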

Conclusion

LLMOps plays a critical role in unlocking the full potential of large language models by combining automation, monitoring, and best practices from DevOps and MLOps. From selecting the right foundation model and fine-tuning it for downstream tasks to ensuring secure deployment, ethical AI, and continuous performance monitoring, LLMOps provides a structured framework for building reliable, scalable, and high-performing NLP applications and chatbots.

By adopting LLMOps, organizations can optimize models efficiently, reduce operational risks, maintain compliance, and deliver intelligent AI solutions that consistently meet business and user expectations.
